Kubernetes Service Mesh Definition

Cloud native applications frequently run in containers as part of a distributed microservices architecture. Kubernetes has become the de facto standard for orchestrating these containerized applications.

Microservices sprawl, the rapid and often uncontrolled growth in the number of microservices, is one unintended outcome of adopting a microservices architecture. This growth presents challenges within a Kubernetes cluster around authentication and authorization, routing between multiple versions and services, encryption, and load balancing.

A service mesh is a mesh of Layer 7 proxies, not a mesh of services. Microservices can use a service mesh to abstract the network away, resolving many of the challenges that arise from talking to remote endpoints within a Kubernetes cluster. Building on Kubernetes allows the service mesh to abstract away how inter-process and service-to-service communications are handled, much as containers abstract away the operating system from the application.

What is Kubernetes Service Mesh?

A Kubernetes service mesh is a tool that adds security, observability, and reliability features to applications at the platform layer instead of the application layer.

Service mesh technology predates Kubernetes. However, growing interest in service mesh solutions is directly related to the proliferation of Kubernetes-based microservices and a resulting interest in Kubernetes service mesh options.

Microservices architectures are heavily network reliant. Service mesh manages network traffic between services.

There are other ways to manage this network traffic, but they are less sustainable than a service mesh because they impose a greater operational burden on DevOps teams in the form of error-prone manual work. A service mesh on Kubernetes achieves the same goal in a much more scalable manner.

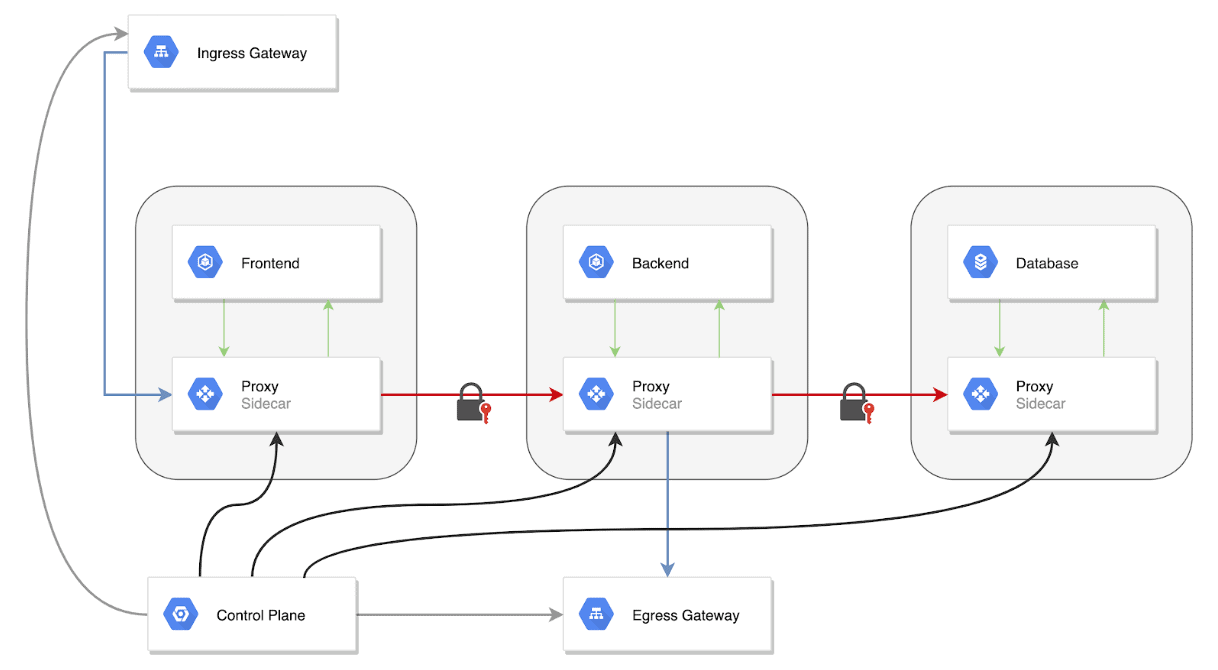

The service mesh in Kubernetes is typically implemented as a set of network proxies. Deployed as “sidecars” alongside the application code, these proxies serve as the entry point for service mesh features and manage communication between the microservices. Together, the proxies make up the data plane of the Kubernetes service mesh, which is configured and managed by the control plane.

Kubernetes and service mesh architectures arose as cloud native applications flourished. A single application may comprise hundreds of services, and there may be thousands of instances of each service. Each of those instances demands dynamic scheduling because it changes rapidly, which is where Kubernetes comes in.

Clearly, this is a highly complex system of service to service communications, but it’s also a basic, normal part of runtime behavior for a standard application. To ensure the app is reliable, secure, and performs well end-to-end, insightful management is essential.

How Does Kubernetes Service Mesh Work?

Distributed applications in any architectural environment, including the cloud, have always required rules to control how their requests get from place to place. A Kubernetes service mesh, like any type of service mesh, does not introduce new logic or functionality to the runtime environment. Instead, it moves the logic that controls service-to-service communications out of the individual services and into a layer of infrastructure.

A service mesh layers atop Kubernetes infrastructure to make inter-service communications over the network reliable and safe. It works much like a tracking and routing service for shipped mail and packages: it keeps track of routing rules and directs traffic dynamically based on those rules to ensure receipt and accelerate delivery.

The components of a service mesh include a data plane and a control plane. Lightweight proxies distributed as sidecars make up the data plane. Users can deploy these proxy technologies to build a service mesh in Kubernetes. In Kubernetes, a proxy runs in every application pod as a sidecar container next to the application container.

The control plane configures the proxies, contains the policy managers, and acts as the certificate authority that issues TLS certificates. It can also support tracing, collect telemetry and other metrics, and in some cases work with other service mesh implementations.
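The division of labor between the two planes can be illustrated with a minimal sketch. The `ControlPlane` and `SidecarProxy` classes, their methods, and the endpoint addresses below are hypothetical names invented for illustration and do not correspond to any particular mesh's API. The sketch only shows the idea that the control plane holds the desired configuration and pushes it to every proxy, while each proxy answers lookups from its own local copy.

```python
from dataclasses import dataclass, field


@dataclass
class SidecarProxy:
    """Hypothetical data-plane proxy deployed next to one service instance."""
    service: str
    routes: dict = field(default_factory=dict)  # destination -> endpoint list
    config_version: int = 0

    def apply(self, version, routes):
        # Store a copy so the proxy keeps serving from its last pushed config.
        self.routes = {dest: list(eps) for dest, eps in routes.items()}
        self.config_version = version

    def resolve(self, destination):
        # The application only names the destination; the proxy finds endpoints.
        return self.routes.get(destination, [])


class ControlPlane:
    """Hypothetical control plane that pushes one shared config to every proxy."""

    def __init__(self):
        self.proxies = []
        self.routes = {}
        self.version = 0

    def register(self, proxy):
        # A newly started sidecar receives the current config immediately.
        self.proxies.append(proxy)
        proxy.apply(self.version, self.routes)

    def set_endpoints(self, destination, endpoints):
        # Any change bumps the version and is pushed to all registered proxies.
        self.routes[destination] = list(endpoints)
        self.version += 1
        for proxy in self.proxies:
            proxy.apply(self.version, self.routes)


control_plane = ControlPlane()
cart_proxy = SidecarProxy("cart")
control_plane.register(cart_proxy)
control_plane.set_endpoints("payments", ["10.0.0.5:8080", "10.0.0.6:8080"])
print(cart_proxy.resolve("payments"))
```

Real control planes distribute configuration over a network protocol and handle failures and partial updates; the in-process method calls here stand in for that machinery.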

In this way, Kubernetes service mesh enables users to separate an application’s business logic from policies controlling observability and security, allowing them to connect microservices and then secure and monitor them moving forward.

A service mesh in Kubernetes enables services to discover each other and communicate. It also uses intelligent routing to control API calls and the flow of traffic between endpoints and services. This in turn enables canary releases, rolling upgrades, blue/green deployments, and other advanced deployment strategies.
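As a rough illustration of the traffic shifting behind a canary release, the sketch below splits requests between two versions of a service according to percentage weights. The function name `pick_version`, the version labels, and the choice to hash a request ID (so the split is reproducible) are assumptions made for this example; a real proxy may instead pick randomly per request.

```python
import hashlib


def pick_version(request_id, weights):
    """Map a request to a service version using percentage weights.

    `weights` maps version name -> integer percentage and must sum to 100.
    """
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    # Hash the request ID into a stable bucket in the range 0..99.
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    cumulative = 0
    for version, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return version
    raise AssertionError("unreachable when weights sum to 100")


# Send roughly 10% of requests to the canary version.
canary_weights = {"v1": 90, "v2": 10}
print(pick_version("req-42", canary_weights))
```

Promoting the canary then becomes a configuration change, for example moving the weights from 90/10 to 50/50 and finally to 0/100, with no change to application code.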

A service mesh on Kubernetes also enables secure communication between services. For example, users can enforce communication policies that allow or deny specific types of communication, such as a policy that denies production services access to client services in a development environment.
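The production-to-development example can be sketched as a small policy check. The `Rule` structure, the `is_allowed` function, and the environment labels are hypothetical; the sketch also assumes that deny rules take precedence over allow rules and that unmatched traffic is denied by default, which is one common convention rather than a universal rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical policy rule; "*" matches any environment."""
    source_env: str
    dest_env: str
    action: str  # "allow" or "deny"


def is_allowed(rules, source_env, dest_env):
    # Collect the actions of every rule that matches this source/destination.
    actions = [
        rule.action
        for rule in rules
        if rule.source_env in (source_env, "*")
        and rule.dest_env in (dest_env, "*")
    ]
    if "deny" in actions:
        return False  # assumption: an explicit deny always wins
    return "allow" in actions  # assumption: no matching rule means deny


rules = [
    Rule("*", "*", "allow"),
    Rule("production", "development", "deny"),
]
print(is_allowed(rules, "production", "development"))
print(is_allowed(rules, "production", "production"))
```

Because the proxies enforce such rules, the services themselves need no knowledge of which environments they are permitted to reach.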

Various Kubernetes service mesh options enable users to observe and monitor even highly distributed microservices systems. A Kubernetes service mesh also frequently integrates with other tracing and monitoring tools to improve discovery and visualization of API latencies, traffic flow, and dependencies between services.
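Because every request passes through a proxy, the mesh can report on each one without any instrumentation in the application. The sketch below shows how such per-request records might be rolled up into per-dependency statistics. The record fields (`source`, `destination`, `status`, `latency_ms`) and the `summarize` function are assumptions for illustration, not the output format of any specific mesh.

```python
from collections import defaultdict
from statistics import median


def summarize(records):
    """Aggregate proxy-reported requests per (source, destination) edge."""
    by_edge = defaultdict(list)
    for rec in records:
        by_edge[(rec["source"], rec["destination"])].append(rec)

    summary = {}
    for edge, recs in by_edge.items():
        # Treat HTTP 5xx responses as errors for this sketch.
        errors = sum(1 for r in recs if r["status"] >= 500)
        summary[edge] = {
            "requests": len(recs),
            "error_rate": errors / len(recs),
            "median_latency_ms": median(r["latency_ms"] for r in recs),
        }
    return summary


records = [
    {"source": "web", "destination": "cart", "status": 200, "latency_ms": 10},
    {"source": "web", "destination": "cart", "status": 200, "latency_ms": 20},
    {"source": "web", "destination": "cart", "status": 503, "latency_ms": 30},
    {"source": "cart", "destination": "payments", "status": 200, "latency_ms": 45},
]
for edge, stats in summarize(records).items():
    print(edge, stats)
```

The keys of the resulting dictionary are the edges of the service dependency graph, which is the kind of data that tracing and monitoring tools visualize.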

This level of functionality is essential to monitoring complex cloud native applications and the distributed microservices environments that comprise them. Observability and granular insights are critical for a higher level of operational control.

What is Istio Kubernetes Service Mesh?

Istio is an open source Kubernetes service mesh that has become the service mesh of choice for many major tech businesses such as Google, IBM, and Lyft. Like all service meshes, Istio has a data plane and a control plane; its data plane is made up of Envoy proxies. These proxies are deployed as sidecar containers within each Kubernetes pod, establishing connections to other services and mediating communications with them.

The rules for managing this communication must be configured on the control plane. This component of the Istio service mesh is responsible for traffic management, protocol-specific fault injection, and several types of Layer 7 load balancing. This application layer load balancing stands in contrast to the default load balancing of Kubernetes Services, which operates only at Layer 4, the transport layer.
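The practical difference between the two layers is what the balancer can see. A Layer 4 balancer sees only addresses and ports, while a Layer 7 proxy can inspect each HTTP request. The sketch below routes on the request path and headers; the `route_l7` function, the route table layout, and the `x-beta` header are hypothetical and only illustrate the kind of decision that is impossible at Layer 4.

```python
def route_l7(request, routes, default):
    """Return the destination of the first route matching path and headers."""
    headers = request.get("headers", {})
    for route in routes:
        # A route matches when the path prefix and all listed headers match.
        prefix_ok = request["path"].startswith(route.get("prefix", "/"))
        headers_ok = all(
            headers.get(name) == value
            for name, value in route.get("headers", {}).items()
        )
        if prefix_ok and headers_ok:
            return route["destination"]
    return default


# Routes are evaluated in order, so the more specific rule comes first.
routes = [
    {"prefix": "/api/cart", "headers": {"x-beta": "true"}, "destination": "cart-v2"},
    {"prefix": "/api/cart", "destination": "cart-v1"},
]
beta_request = {"path": "/api/cart/items", "headers": {"x-beta": "true"}}
print(route_l7(beta_request, routes, default="frontend"))
```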

Other components collect metrics on traffic and respond to various data plane queries such as access control, authentication and authorization, or quota checks. They can also interface with monitoring and logging systems, depending on which adapters are enabled, and provide encryption and authentication policies and enforcement. For example, Istio supports mutual TLS authentication and role-based access control.
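To make the idea of TLS authentication between services concrete, the sketch below builds a server-side TLS context with Python's standard `ssl` module that requires every client to present a certificate (mutual TLS). This is not how Istio itself is configured; in a mesh, the sidecar proxies perform this handshake and the control plane issues the certificates. The function name and the certificate file parameters are placeholders.

```python
import ssl


def server_mtls_context(cert_file=None, key_file=None, ca_file=None):
    """Build a server-side TLS context that demands a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED is what makes the TLS "mutual": the handshake fails
    # unless the client presents a certificate signed by a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own identity (placeholder paths supplied by the caller).
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The CA used to validate client certificates.
        ctx.load_verify_locations(cafile=ca_file)
    return ctx


context = server_mtls_context()
print(context.verify_mode == ssl.CERT_REQUIRED)
```

With a service mesh, the application keeps speaking plain HTTP to its local sidecar, and the equivalent of this context lives in the proxy.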

Many other tools integrate with Istio to expand its capabilities.

Benefits of Service Mesh in Kubernetes

Microservices architecture has been a key step in the move towards cloud native architecture. While it provides flexibility, microservices architecture is also inherently complex. Container services can manage and deploy microservices architectures, but as they grow and sprawl, insight becomes more limited. This presents the main limitation for cloud native architecture, which demands deep insight for traffic management, security, and other critical functions.

A service mesh aids in resolving some of this complexity by providing the ability to use the services of multiple stack layers in a single infrastructure layer—all without requiring integration or code modification by your application developers. This makes communication between services faster and more reliable. Service mesh in Kubernetes also offers observability in the form of logging, tracing, and monitoring; granular traffic management; security in the form of encryption and authentication and authorization; and failure recovery.

In practice, using a Kubernetes service mesh makes it easier to implement security and encryption between services and reduces the burden on DevOps teams. A service mesh also makes it simpler to trace a service latency issue. And although different service meshes provide different features, common capabilities include:

- API (Kubernetes Custom Resource Definitions (CRDs), programmable interface)

- Communication resiliency (circuit-breaking, retries, rate limiting, timeouts)

- Load balancing (consistent hashing, least request, zone/latency aware)

- Observability (alerting, Layer 7 metrics, tracing)

- Routing control (traffic mirroring, traffic shifting)

- Security (authorization policies, end-to-end mutual TLS encryption, service level and method level access control)

- Service discovery (distributed cache)
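Of the capabilities listed above, circuit breaking is one that benefits from a concrete illustration. The sketch below is a minimal, generic circuit breaker, not the implementation used by any particular mesh. The class name, the thresholds, and the injectable `clock` parameter (used to make the behavior easy to test) are assumptions for this example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                # Fail fast instead of piling load on an unhealthy service.
                raise CircuitOpenError("circuit is open")
            # Reset period elapsed: allow one trial call through (half-open).
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


breaker = CircuitBreaker(failure_threshold=2, reset_after_s=5.0)
print(breaker.call(lambda: "ok"))
```

In a mesh, this logic runs in the sidecar proxy, so every service gets the same protection without embedding a resiliency library in its own code.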

Application teams can implement a service mesh and deploy common implementations that fulfill standard requirements. This follows the same basic principle as Kubernetes itself: provide a standard interface for running applications and meeting their common needs.

Does VMware NSX Advanced Load Balancer Offer a Kubernetes Service Mesh?

The VMware NSX Advanced Load Balancer integrates with Tanzu Service Mesh (TSM), which is built on top of Istio and adds value-added services. By expanding on the TSM solution, VMware NSX Advanced Load Balancer offers north-south connectivity, security, and observability inside and across Kubernetes clusters and across multiple sites and clouds. In addition, enterprises are able to connect modern Kubernetes applications to traditional application components in VM environments and clouds, secure transactions from end users to the application, and seamlessly bridge multiple environments.

For more on the actual implementation of load balancing, security applications, and web application firewalls, check out our Application Delivery How-To Videos.