Avi Kubernetes Operator Deployment Guide

Overview

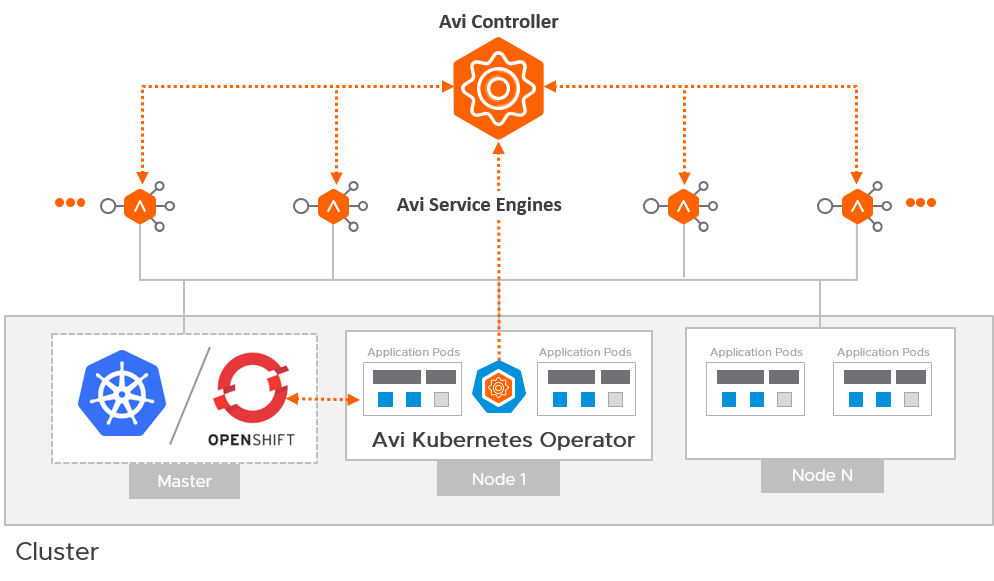

The Avi Kubernetes Operator (AKO) is an operator that works as an Ingress controller and performs Avi-specific functions in a Kubernetes/OpenShift environment with the Avi Controller. It translates Kubernetes/OpenShift objects into Avi Controller API calls.

This guide provides an architectural overview of AKO and describes the design considerations for an AKO deployment.

Architecture

The Avi deployment in Kubernetes/OpenShift for AKO comprises the following main components:

- The Avi Controller

- The Service Engines (SE)

- The Avi Kubernetes Operator (AKO)

The Avi Controller

The Avi Controller, which is the central component of the Avi architecture, is responsible for the following:

- Control plane functionality, such as:

  - Infrastructure orchestration

  - Centralized management

  - Analytics dashboard

- Integration with the underlying ecosystem for managing the lifecycle of the data plane (Service Engines).

The Avi Controller does not handle any data plane traffic.

In Kubernetes/OpenShift environments, the Avi Controller is deployed outside the Kubernetes/OpenShift cluster, typically in the native type of the underlying infrastructure. However, it can be deployed anywhere as long as connectivity and latency requirements are satisfied.

The Avi Service Engines

The SEs implement data plane services such as load balancing, Web Application Firewall (WAF), and DNS/GSLB.

In Kubernetes/OpenShift environments, the SEs are deployed external to the cluster and typically in the native type of the underlying infrastructure.

The Avi Kubernetes Operator (AKO)

AKO is an Avi pod running in Kubernetes that provides an Ingress controller and Avi-configuration functionality.

AKO remains in sync with the required Kubernetes/OpenShift objects and calls the Avi Controller APIs to deploy the Ingresses and Services via the Avi Service Engines.

AKO is deployed as a pod via Helm.

Avi Cloud Considerations

Avi Cloud Type

The Avi Controller uses the Avi Cloud configuration to manage the SEs. This Avi Cloud is usually of the underlying infrastructure type, for example, VMware vCenter Cloud, Azure Cloud, or Linux Server Cloud.

Note: This deployment in Kubernetes/OpenShift does not use the Kubernetes/OpenShift cloud types. The integration with Kubernetes/OpenShift and the application-automation functions are handled by AKO and not by the Avi Controller.

Multiple Kubernetes/OpenShift Clusters

A single Avi Cloud can be used for integration with multiple Kubernetes/OpenShift clusters, with each cluster running its own instance of AKO. Clusters in ClusterIP mode are separated on the data plane through unique SE groups per cluster.

IPAM and DNS

The IPAM and DNS functionality is handled by the Avi Controller via the Avi cloud configuration.

Refer to the Service Discovery Using IPAM and DNS article for more information on supported IPAM and DNS types per environment.

Service Engine Groups

AKO supports a separate SE group per Kubernetes/OpenShift cluster, and each cluster must be configured with its own SE group. Multiple SE groups within the same cluster are not supported. As a best practice, use a non-default SE group for every cluster. A separate SE group per cluster is not required if AKO runs in NodePort mode.
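The SE group rule above can be checked before installation. The following is a minimal sketch, assuming a hypothetical inventory that maps each cluster name to its planned SE group and service type. The function name and the dictionary keys are illustrative only and are not part of AKO or the Avi Controller.

```python
from collections import defaultdict


def find_shared_se_groups(clusters):
    """Return SE groups planned for more than one ClusterIP-mode cluster.

    `clusters` is a hypothetical inventory: cluster name -> dict with the
    keys 'se_group' and 'service_type' ("ClusterIP" or "NodePort").
    """
    users = defaultdict(list)
    for name, cfg in clusters.items():
        # A dedicated SE group is not required in NodePort mode.
        if cfg["service_type"] == "NodePort":
            continue
        users[cfg["se_group"]].append(name)
    # Keep only the groups that more than one cluster would share.
    return {group: sorted(names) for group, names in users.items() if len(names) > 1}
```

An empty result means that every ClusterIP-mode cluster in the inventory has its own SE group.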

Avi Controller Version

AKO version 1.4.1 supports Avi Controller releases 20.1.4 and above only.
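A version requirement like this one is easy to get wrong with plain string comparison, because "20.1.10" sorts before "20.1.4" as text. The sketch below compares releases numerically. It assumes release strings in plain dotted numeric form; patch suffixes are not handled, and the function names are hypothetical.

```python
# Minimum Avi Controller release stated above for AKO 1.4.1.
MIN_CONTROLLER_RELEASE = (20, 1, 4)


def parse_release(release):
    """Split a dotted release string such as '20.1.4' into a tuple of ints."""
    return tuple(int(part) for part in release.split("."))


def controller_is_supported(release):
    """Return True if the release is at or above the stated minimum."""
    return parse_release(release) >= MIN_CONTROLLER_RELEASE
```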

Network Considerations

Avi SE Placement / Pod Network Reachability

With AKO, the Service Engines are deployed outside the cluster. To load balance requests directly to the pods, the pod CIDR must be routable from the SE. Depending on the routability of the pod network provided by the CNI used in the cluster, AKO can route using the following options:

Pods are not Externally Routable – Static Routing

For CNIs such as Canal, Calico, Antrea, and Flannel, the pod subnet is not externally routable. In these cases, the CNI assigns a pod CIDR to each node in the Kubernetes cluster. The pods on a node are assigned IP addresses from the CIDR allocated to that node and are routable from within the node. In this scenario, pod reachability depends on where the SE is placed.

If the SE is placed on the same network as the Kubernetes/OpenShift nodes, you can turn on static route programming in AKO. With this enabled, AKO syncs the pod CIDR of each Kubernetes/OpenShift node and programs a static route on the Avi Controller for each pod CIDR, with the Kubernetes/OpenShift node IP as the next hop. Static routing per cluster uses a new label-based routing scheme. No additional user configuration is required for this label-based scheme; however, upgrading AKO will be service impacting and requires an AKO restart.
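The mapping described above, one static route per node with the pod CIDR as the prefix and the node IP as the next hop, can be sketched as follows. This is an illustration of the idea only: the input shape and the output dictionaries are hypothetical and do not represent the Avi Controller API schema or the way AKO is implemented.

```python
import ipaddress


def build_static_routes(nodes):
    """Derive one static route per node from its pod CIDR and node IP.

    `nodes` is a hypothetical list of dicts with the keys 'node_ip' and
    'pod_cidr'. Values are validated with the standard ipaddress module.
    """
    routes = []
    for node in nodes:
        prefix = ipaddress.ip_network(node["pod_cidr"])   # raises ValueError if invalid
        next_hop = ipaddress.ip_address(node["node_ip"])  # raises ValueError if invalid
        routes.append({"prefix": str(prefix), "next_hop": str(next_hop)})
    return routes
```

The next hop is only directly usable when the SE shares a network with the nodes, which is why this option depends on SE placement.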

Pods are not Externally Routable – NodePort

In cases where direct load balancing to the pods is not possible, NodePort-based services can be used as the pool members (endpoints) of the Avi virtual service. For this functionality, configure the services referenced by Ingresses/Routes as type NodePort and set the configs.serviceType parameter to NodePort to enable NodePort-based Routes/Ingresses. The nodeSelectorLabels.key and nodeSelectorLabels.value parameters are specified during the AKO installation to select the required nodes from the cluster for load balancing. The required nodes in the cluster must be labelled with the configured key and value pair.
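To make the node selection concrete, the sketch below filters nodes by a label key and value pair and pairs each selected node IP with the service's node port. The node dictionaries and the function name are hypothetical and only illustrate the selection logic. They are not AKO's implementation.

```python
def nodeport_pool_members(nodes, node_port, selector_key, selector_value):
    """Return (node_ip, node_port) pairs for nodes that carry the label.

    `nodes` is a hypothetical list of dicts with the keys 'ip' and 'labels'.
    `selector_key` and `selector_value` play the role of
    nodeSelectorLabels.key and nodeSelectorLabels.value.
    """
    members = []
    for node in nodes:
        # Nodes without the configured label are left out of the pool.
        if node.get("labels", {}).get(selector_key) == selector_value:
            members.append((node["ip"], node_port))
    return members
```

A node that lacks the configured label never appears in the result, which mirrors the requirement to label the required nodes.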

Pod Subnet is Routable

For CNIs such as NSX-T CNI, AWS CNI (in EKS), and Azure CNI (in AKS), the pod subnet is externally routable. In this case, no additional configuration is required to allow SEs to reach the pod IPs. Set Static Route Programming to Off in the AKO configuration. SEs can be placed on any network and will be able to route to the pods.

To know more about the CNIs supported, click here.
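The three reachability options in this section can be summarized as a small decision function. This is a sketch only: the two boolean inputs and the returned labels are hypothetical names chosen for illustration and do not correspond to AKO parameters.

```python
def choose_reachability_option(pods_externally_routable, se_on_node_network):
    """Pick one of the three options described in this section.

    Both arguments are booleans describing the environment.
    """
    if pods_externally_routable:
        # The CNI makes pod IPs reachable; static routes are not needed.
        return "routable-pod-subnet"
    if se_on_node_network:
        # The SE can use each node IP as the next hop for that node's pod CIDR.
        return "static-routing"
    # Direct load balancing to the pods is not possible; target the nodes instead.
    return "nodeport"
```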

Deployment Modes

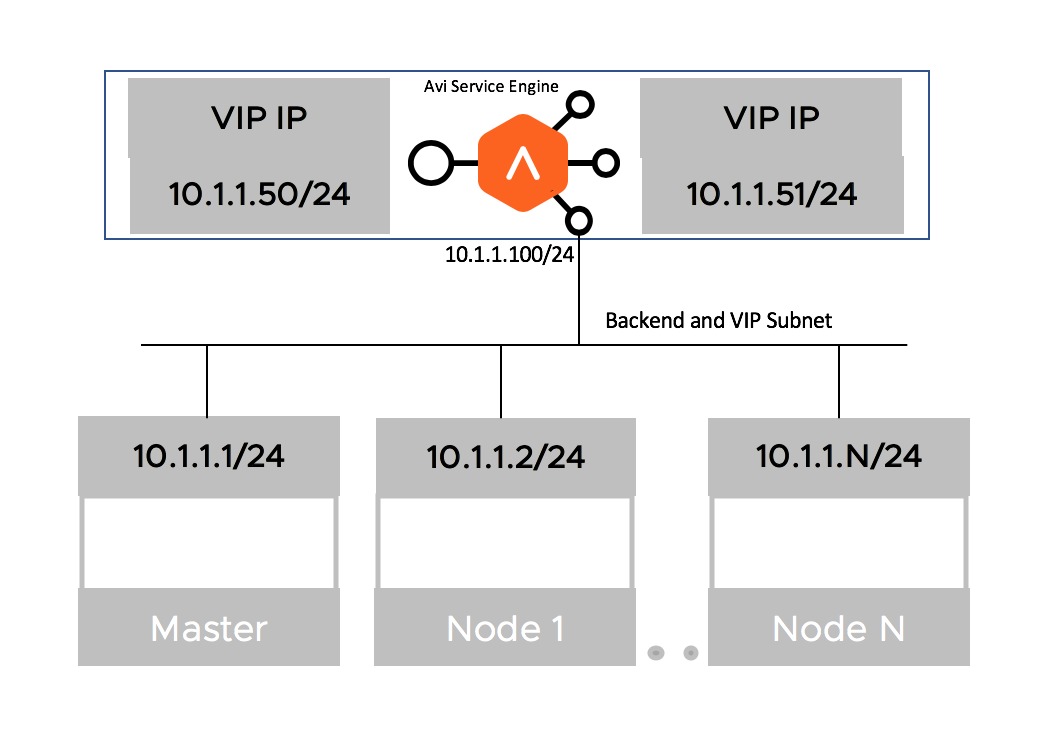

Single-Arm Deployment

A deployment in which the virtual IP (VIP) address and the Kubernetes/OpenShift cluster are in the same network subnet is called a Single-Arm deployment.

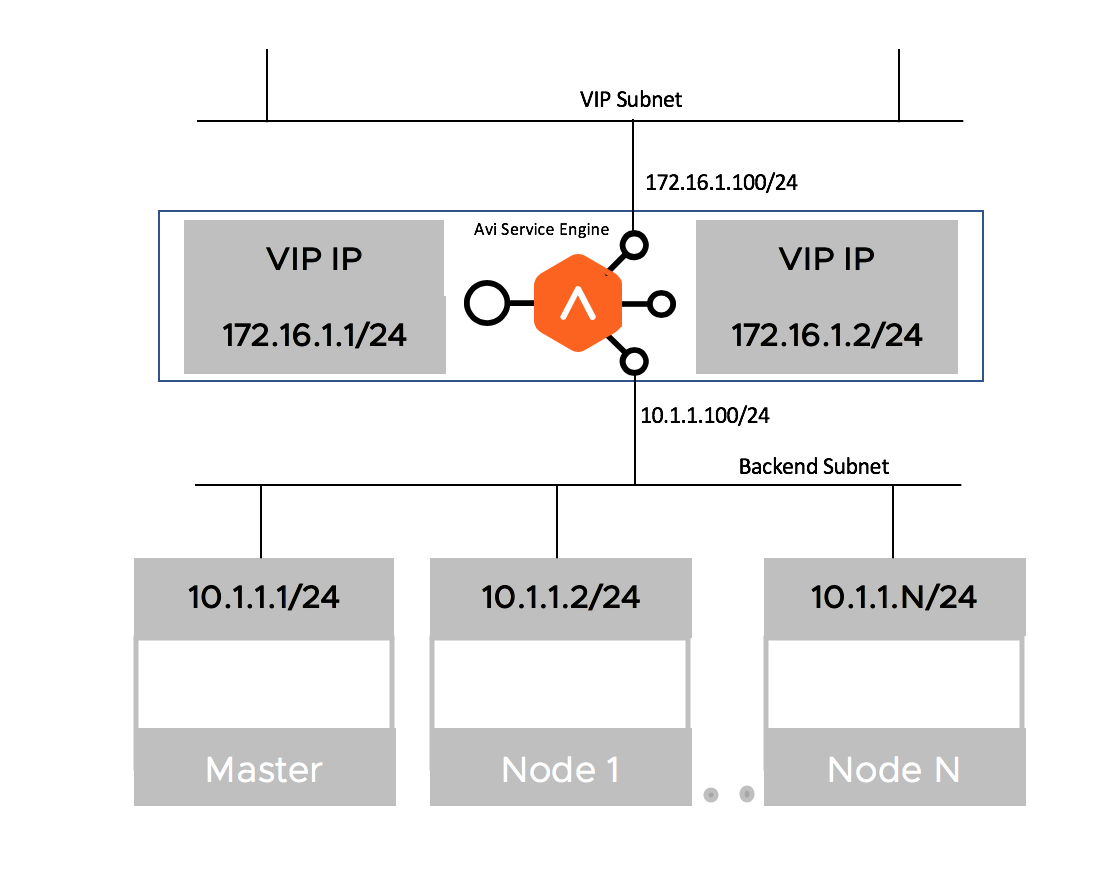

Two-Arm Deployment

A deployment in which the virtual IP (VIP) address and the Kubernetes/OpenShift cluster are in different network subnets is called a Two-Arm deployment.

AKO supports both Single-Arm and Two-Arm deployments with vCenter Cloud in write-access mode.
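The distinction between the two modes comes down to a subnet membership test. The following sketch classifies a deployment from a VIP address and the node subnet using Python's standard ipaddress module. The function name and the returned labels are hypothetical.

```python
import ipaddress


def deployment_mode(vip, node_subnet):
    """Return 'single-arm' if the VIP lies in the node subnet, else 'two-arm'.

    `vip` is an IP address string and `node_subnet` is a CIDR string for
    the network that the cluster nodes are attached to.
    """
    network = ipaddress.ip_network(node_subnet)
    if ipaddress.ip_address(vip) in network:
        return "single-arm"
    return "two-arm"
```

For example, a VIP of 10.10.1.50 with nodes on 10.10.1.0/24 is classified as single-arm, while a VIP of 10.20.1.50 with the same node subnet is classified as two-arm.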

Annotations

AKO version 1.4.1 does not support annotations. However, the HTTPRule and HostRule CRDs can be leveraged to customize the Avi configuration. Refer to the Custom Resource Definitions article for more information on what parameters can be tweaked.

Multi-Tenancy

AKO supports multi-tenancy.

AKO Support

Refer to the Compatibility Guide for information on the supportability of features and environments with AKO.

Document Revision History

| Date | Change Summary |
|---|---|
| August 31, 2021 | Updated the support of autoFQDN for AKO version 1.5.1 |
| February 12, 2021 | Updated the support of autoFQDN for AKO version 1.3.3 |
| December 18, 2020 | Updated the Design Guide for AKO version 1.3.1 |
| September 18, 2020 | Published the Design Guide for AKO version 1.2.1 |
| July 20, 2020 | Published the Design Guide for AKO version 1.2.1 (Tech Preview) |