AKO Deployment in GKE, AKS, and EKS

Overview

Google Kubernetes Engine (GKE) provides a managed environment for deploying, managing, and scaling your containerized applications using Google infrastructure.

Amazon Elastic Kubernetes Service (EKS) is a managed Kubernetes service that makes it easy for you to run Kubernetes on AWS and on-premises.

Azure Kubernetes Service (AKS) is a fully managed Kubernetes service that offers serverless Kubernetes, an integrated continuous integration and continuous delivery (CI/CD) experience, and enterprise-grade security and governance.

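This article explains how to deploy the Avi Kubernetes Operator (AKO) in GKE, EKS, and AKS.

In all three deployments, the cluster is deployed such that pod IP addresses are natively routable.

Because the pod networks are directly reachable from the Avi Service Engines, AKO does not need to configure static routes for them. Set `AKOSettings.disableStaticRouteSync` to `true` in AKO for each of these deployments.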
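As a minimal sketch, assuming AKO is installed with its Helm chart, the `AKOSettings.disableStaticRouteSync` setting maps to the following excerpt of the chart's values.yaml. Only this key comes from this article; every other setting is omitted. The value is written as a quoted string to match the style of the chart's default values file, which is an assumption worth checking against your chart version.

```yaml
# values.yaml (AKO Helm chart), excerpt only; all other settings omitted
AKOSettings:
  # Pod IPs are natively routable in GKE, EKS, and AKS deployments,
  # so AKO does not need to add static routes to the pod networks.
  disableStaticRouteSync: 'true'
```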

Deploying AKO in GKE

To deploy AKO in GKE:

- Ensure that the pods on the GKE cluster are reachable from the Avi Controller.

- Ensure that the pod IP addresses are natively routable within the cluster's VPC network and within other VPC networks connected to it by VPC Network Peering.

  Note: VPC-native clusters in GCP support this configuration by default. Enable VPC-native networking when creating the cluster.

-

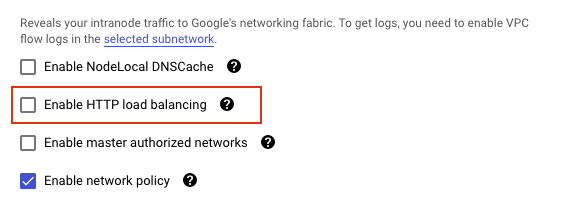

Ensure that the following parameters are configured in the GKE cluster as below:

- The option

Enable HTTP load balancingis unchecked since the Google Cloud load balancer with Kubernetes does not have to be used.

Enable network policy(optional) can be selected to install Calico CNI, GKE has built-in support for Calico.

- The option

Deploying AKO in EKS

To deploy AKO in EKS:

- Ensure that the EKS cluster has at least two subnets configured, in two different availability zones of the same VPC.

- Amazon EKS works with the Project Calico network policy engine to provide fine-grained network policies for Kubernetes workloads.

  Note: When Calico is used, you can optionally set `AKOSettings.cniPlugin` to `calico` in AKO.

- By default, an EKS cluster has no nodes. Create a new node group (Auto Scaling group) in the same subnets as the EKS cluster and associate it with the cluster.

- The Controller can run in a different subnet. For the EKS nodes to be able to connect to the Controller, add a custom security group to the nodes that allows access to the Controller subnet.

- AKO supports Multi-AZ VIPs for AWS. VIPs can be in a single subnet or in multiple subnets across multiple AZs.

- To configure MultiVIP, add the desired subnet IDs to `NetworkSettings.vipNetworkList`. A single subnet ID in `vipNetworkList` signifies single-VIP mode. This setting serves as the global MultiVIP configuration. For example:

  ```yaml
  # values.yaml
  # [...]
  NetworkSettings:
    vipNetworkList:
      - networkName: subnet-1
      - networkName: subnet-2
  # [...]
  ```

- To configure multiple VIPs for only a subset of virtual services, use the AviInfraSetting CRD and specify the desired subnets under `spec.network.names`. This configuration overrides the global configuration. For example:

  ```yaml
  # multivip-cr.yaml
  apiVersion: ako.vmware.com/v1alpha1
  kind: AviInfraSetting
  metadata:
    name: multivip-cr
    namespace: multivip-namespace
  spec:
    network:
      names:
        - subnet-1
        - subnet-2
  ```

NOTE: When configuring MultiVIP, make sure that every subnet can allocate a VIP. If allocation fails for even a single VIP (for example, because of IP exhaustion), the entire request fails. This is the same behavior as a VIP allocation failure in single-VIP mode.
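One possible way to meet the cluster-side EKS requirements listed earlier (two subnets in different zones of the same VPC, a node group in those subnets, and a custom security group that allows access to the Controller subnet) is an eksctl ClusterConfig. This is a sketch that assumes eksctl is used to create the cluster. The cluster name, region, subnet IDs, security group ID, and instance sizing are hypothetical placeholders.

```yaml
# cluster.yaml: hypothetical eksctl config; all names and IDs are placeholders
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: ako-eks          # hypothetical cluster name
  region: us-west-2      # hypothetical region

# Reuse two existing subnets of the same VPC, in two different zones
vpc:
  subnets:
    private:
      us-west-2a:
        id: subnet-0aaaaaaaaaaaaaaaa   # placeholder
      us-west-2b:
        id: subnet-0bbbbbbbbbbbbbbbb   # placeholder

# EKS starts with no nodes; this self-managed node group (an Auto Scaling
# group) is placed in the cluster's private subnets listed above
nodeGroups:
  - name: ako-ng-1
    instanceType: m5.large
    desiredCapacity: 2
    privateNetworking: true
    securityGroups:
      # Hypothetical custom security group allowing access to the Controller subnet
      attachIDs: ["sg-0cccccccccccccccc"]
```

With eksctl installed, `eksctl create cluster -f cluster.yaml` creates the cluster and the node group together, so the node group is associated with the cluster from the start.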

Deploying AKO in AKS

To deploy AKO in AKS:

- In AKS, it is not possible to disable the Azure Load Balancer when a service of `type: LoadBalancer` is created.

- To stop AKS from creating a public IP and have it use an internal load balancer instead, add the annotation `service.beta.kubernetes.io/azure-load-balancer-internal: "true"` to the service.

- By default, AKS provisions a Standard SKU load balancer and uses it for egress. If public IPs are disallowed or additional hops are required for egress, refer to the Azure article Customize cluster egress with a User-Defined Route.
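For example, a Service of type LoadBalancer that asks AKS for an internal load balancer instead of a public IP carries the `service.beta.kubernetes.io/azure-load-balancer-internal` annotation as follows. The service name, selector, and ports are hypothetical placeholders. Annotation values are strings, so `true` is quoted.

```yaml
# internal-lb-service.yaml: hypothetical Service; names and ports are placeholders
apiVersion: v1
kind: Service
metadata:
  name: my-app
  annotations:
    # Ask AKS for an internal Azure load balancer instead of a public IP
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  selector:
    app: my-app
  ports:
    - port: 80
      targetPort: 8080
```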